You have probably heard the term “misinformation” more than once. Whether in class, at home, in political speeches, or in the media itself, we seem to be living in what has been described as the golden age of misinformation, disinformation, fake news, and conspiracy theories. It’s undeniable that it has never been easier to spread false and inflammatory information. And while, like me, you may be aware that misinformation exists, it’s hard to grasp exactly how deeply the media is involved in controlling the information available to society. The keyword here is media, with a particular focus on the social network giant Facebook.

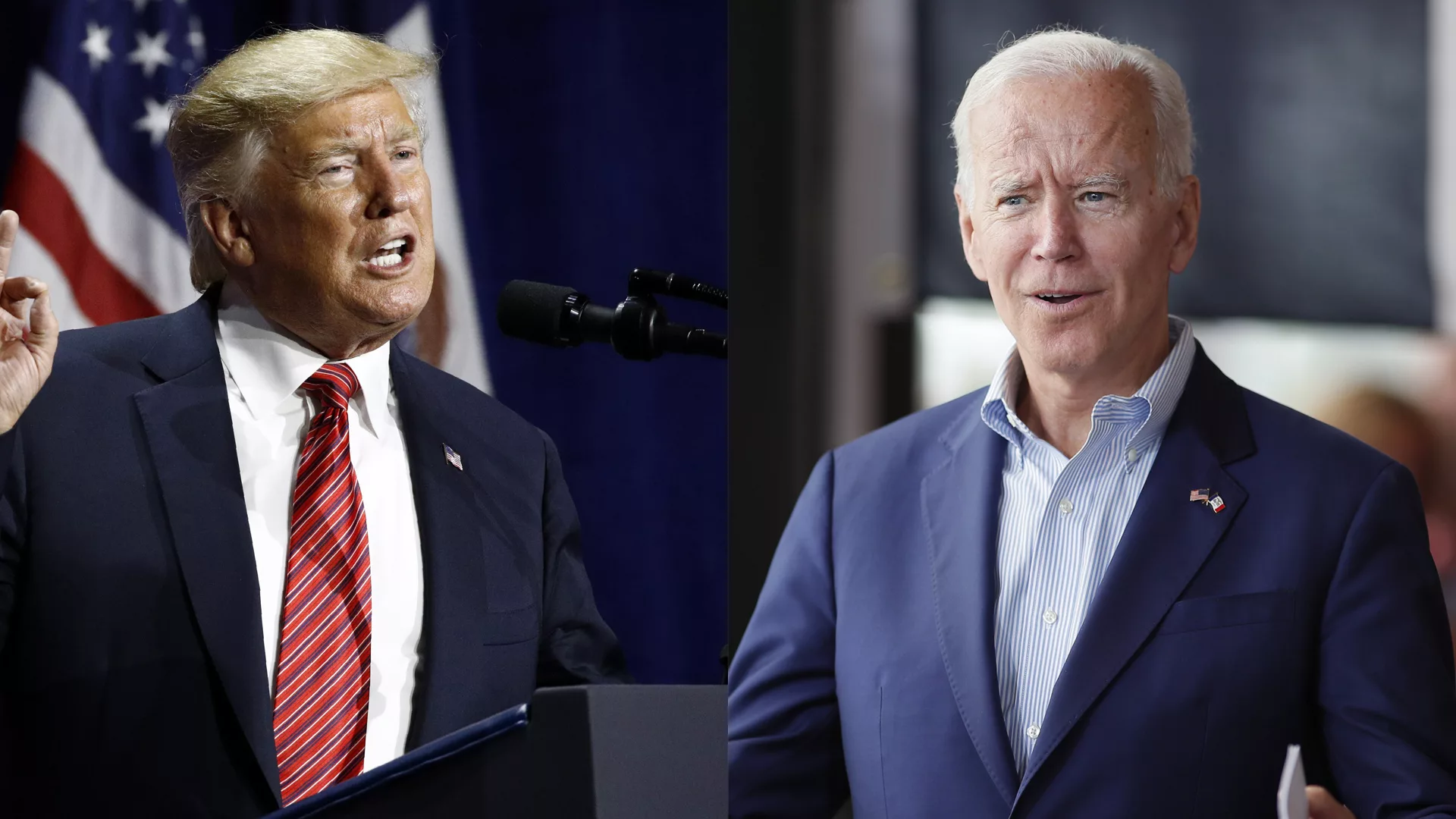

Facebook is currently facing its biggest credibility crisis after a former employee, Frances Haugen, came forward as a whistleblower to the US Congress and the US Securities and Exchange Commission. Haugen spent two years on a team responsible for studying the effects of Facebook’s products on users, with a focus on preventing election interference. Having worked for four social networks, including Facebook, she speaks as both an expert and an insider, which makes her a powerful critic. Haugen has accused Facebook of knowingly causing public harm and of lying to its investors in the process. Alongside her testimony, hundreds of internal Facebook documents have been leaked that, she argues, show the network played a role in deepening the spread of misinformation, particularly around the 2020 presidential election between Donald Trump and current president Joe Biden. To picture the magnitude and severity of the issue, it’s important to note that this is the first time in history that a Justice Department investigation has been so entangled with, and preoccupied by, a social media platform.

I knew Facebook was part of the problem, but it wasn’t until I listened to an episode of the Vox Conversations podcast that my awareness turned into genuine fear and Facebook’s crisis became one worth following. The guest, Joe Bernstein (senior reporter at BuzzFeed), made the following comment: “There are certain interests which are invested in over-hyping disinformation. It’s good for business, and it helps deny the real roots of the issue.” While Haugen’s ongoing testimony is forcing Facebook to face the truth, holding the company accountable for its role in “over-hyping disinformation” is long overdue. This is why we need Facebook to “own up” and to be transparent. Facebook must act ethically because of how easily it can influence people and because of its looming presence on the internet. As Haugen warned in her testimony, if Facebook lacks accountability and continues polarizing the content available to users, “it erodes our civic trust, and it erodes our ability to care for each other.”

The allegations against Facebook are two-fold, but the real-life implications are many.

In 2018, the Cambridge Analytica case put Facebook in a delicate situation. The platform had been accused of allowing third parties to use its users’ data to manipulate the 2016 U.S. election. Unfortunately, the past has come back to haunt Facebook in the most recent American election (2020). Haugen has accused Facebook of “failing to heed internal concerns over election misinformation.” The true shock comes from claims that Facebook was knowingly feeding misinformation to its users and knowingly dismissing the issues flagged by its employees. According to the New York Times, a Facebook data scientist warned coworkers a few weeks before the 2020 election that 10% of all views of political content in the U.S. were of posts claiming the vote was fraudulent. This figure came from Facebook’s own internal research, which is among the documents leaked by Haugen. In another of the documents, Facebook researchers created a fake profile under the name Carol Smith. They gave the profile the characteristics of an average American conservative woman, listing her interests as Donald Trump and Fox News. Within just two days, Facebook’s algorithm was recommending that “Carol Smith” join groups known for spreading conspiracy theories (such as QAnon).

As for the second accusation, Haugen stated that Facebook has worsened the mental health of teenage girls through its products and content. Facebook’s own studies show that its algorithm was suggesting anorexia-related content on Instagram, further damaging the body image of teenage girls in the UK. As part of her testimony, she leaked a study in which Facebook found that 17% of teenage girls in the UK said their eating disorders got worse after prolonged use of Instagram.

Haugen argues that the algorithm is at the root of the issue, but that Facebook lacks the will to make it safer for users.

How does the algorithm fit into the Facebook crisis? Put simply, the algorithm produces real-life effects that can disrupt society. Right away, we see the problem with Facebook’s algorithmic model: it is built on virality and amplifies inflammatory content, so no matter how quickly an extreme page or group is shut down, it will already have had a wide and irreversible reach.

In Facebook’s defense, Haugen says the company did tweak the algorithm to improve safety before the 2020 election. Once the election was over, however, Haugen claims “they changed the settings back to what they were before, prioritizing growth over safety.” She even described the change in settings as feeling like a “betrayal of democracy.” With the storming of the Capitol, and the use of Facebook in the planning, execution, and spread of the event (for example, using Facebook to coordinate bringing paramilitary gear to the Capitol), it became clear that the platform is making it easier for authoritarianism to win, both online and in real life.

Hence, while it is obvious that Facebook bears responsibility for both misinformation and triggering content in exchange for growth, more users, and more views, we often struggle to see why the fix isn’t as simple as changing the algorithm. For Facebook, the algorithm is ultimately a question of how much money the company makes. For a company that reported a net income of US$29 billion in 2020, making the algorithm safer and less tailored to each person means users will spend less time on the site. In turn, they will see fewer advertisements, and Facebook’s revenues will fall.

A further consideration about the algorithm comes from Haugen’s testimony itself: “During my time at Facebook, I came to realize a devastating truth: Almost no one outside of Facebook knows what happens inside Facebook.” Let’s reflect. How much do you really know about the algorithm? How do you know whether your Facebook feed contains true information? Why do we need so many ads?

Truth be told, we cannot answer these questions because we cannot see or understand the choices Facebook makes. More importantly, government regulators do not have a complete understanding of Facebook’s actions either. In her testimony, Haugen argues that Facebook has been hiding its research from the government, investors, and public scrutiny. She alleges that Facebook wants users to believe they must choose between complete freedom of speech and the way Facebook currently operates, painting the issues it faces as unsolvable. Rather than conform, Haugen suggests that users should expect greater visibility into how the platform works, and expect “nothing less than full transparency.” By agreeing with Facebook that its issues are “unsolvable,” we ignore the fact that Facebook does have the resources and the sophisticated understanding needed to make the platform safer without censoring users in the process. It just chooses not to.

Rebranding Facebook does not make these problems go away.

Facebook CEO Mark Zuckerberg recently announced that the company would be rebranded as “Meta.” Meta will oversee Facebook itself, along with other platforms such as Messenger, Instagram, WhatsApp, and Oculus. Essentially, Facebook wants to build a “Metaverse”: virtual spaces where users will be able to create, socialize, play, and buy from each other beyond the social networking apps. Even if this rebranding was a long-term plan for Facebook, it could not have come at a worse time.

Changing the company’s name from Facebook to Meta will not dissociate it from the controversy surrounding Haugen’s testimony. Meta will still be managed by the same people, with the same business values behind it. The rebranding has been subject to online ridicule, with U.S. computer scientist Matt Blaze sarcastically comparing the renaming of Facebook to Meta with the CIA’s rebranding of “torture” as “enhanced interrogation.” It looks good on paper, yet changes nothing about how things are done in real life. Instead, “Meta” seems to be just a facade for a company whose moral compass is broken.

Crucial to this rebranding are the employees, who fear that “Meta” will do nothing for the general good of its users if Facebook’s internal mechanisms do not change as well. Documents leaked by Haugen show that Facebook workers knew about the platform’s problems with election misinformation and anorexia-related content, and warned senior leadership. Facebook’s “very open work culture” allowed staffers to raise alarms and complaints by encouraging them to share their opinions, but those concerns were not followed up. Facebook thus ignored the “substance and implications” of these complaints, despite employees’ protests. Overall, employees and the public must ask why such an immersive “Metaverse” is needed when the issues so present in, and incubated by, the current platform have not been fixed.

Ultimately, it comes down to transparency, making Facebook compatible with democratic values, and dismantling the algorithm.

The solutions proposed to Facebook’s current crisis vary. Haugen believes that Mark Zuckerberg (who holds 55% of all voting shares at Facebook) must accept a trade-off between public good and profit, and that the company must declare “moral bankruptcy.” For U.S. legislators, the solution is to push for reform of Section 230 of the U.S. Communications Decency Act, which shields social networks and online platforms like Facebook from liability for what their users post. This reform would allow private citizens to sue Facebook for harm done, giving them some form of recourse. Others in the tech industry argue that Facebook should limit how frequently users can post and introduce automatic features that cap the virality of a post or group when it is gaining engagement at an unusually fast rate.

I view the issue as one centered on the concept of “trust.” If Facebook were to open up its algorithms so that users could see how their feeds are being tailored, we could trust the posts we see. Or, if Facebook got rid of tailored algorithms and simply showed users what is going viral in real time, we would be exposed to all types of content. Perhaps diminishing the concept of “virality” is a necessary step, although that alone is another debate. Like Haugen, I believe Facebook has the money and people to invest in “human content moderation,” that is, using people rather than artificial intelligence to manage the content being generated, posted, and shared. Facebook deserves a chance to be seen as a force for good and as a platform we can rely on.

Nevertheless, I want to leave you with one last note: How far are we, the public, to blame? We are not passive users either, as many of us look to social media, like Facebook, as our only source of information. We complain about social media, but we also spend hours combing through our networks and curating our “ideal” feed. The algorithm rewards engagement, so when we interact on platforms like Facebook, we are actively deciding what to like, comment on, dislike, follow, and unfollow. Must we mistrust, rather than trust, the content we see from this point forward? Sadly, if we want to be vigilant users of the platform, this seems to be where we are headed unless Facebook undergoes serious change.